Flexible Processing of Big Earth Data over High Performance Computing Architectures

BIGEARTH is a CDI-STAR project, funded by the Romanian Space Agency (ROSA), for 2013-2016.

Project Website: http://cgis.utcluj.ro/bigearth

Earth Observation (EO) data repositories grow by several terabytes each day, which has become a major problem for the organizations that maintain them. The cost of data management can rapidly exceed the value of the data. Administering storage capacity for big datasets, access policy, data governance, protection, searching, fetching, and complex processing is expensive, and forces organizations to look for solutions that balance the cost and the value of data. Data creates value only when it is used, so data protection has to be oriented toward allowing innovation, which sometimes depends on creative people achieving unexpected, valuable results. Protection has to maintain an equilibrium between the possibility of creating value and the risk of exposing data. The most efficient way of supporting innovation is to use big data through competitive applications that reveal and maximise the value of the processed data. Sometimes keeping the annotations and knowledge extracted from the initial data is more efficient and productive than keeping the huge raw data, even though the possibility of extracting new knowledge later through more advanced processing is then lost.

The BIGEARTH project mainly considers the huge volumes of EO image data, concentrating on the possibility of revealing knowledge in a flexible and adaptive manner. Users can describe and experiment with different complex algorithms themselves, through analytics, in order to extract value from the data. The analytics use descriptive and predictive models to gain valuable knowledge and information from data analysis. The BIGEARTH project develops and experiments with techniques and methodologies for developing and executing analytics in a very flexible and interactive manner. The flexible description of processing has an impact on execution performance, on the user's access to simple and complex processing algorithms, on process scheduling, and on access to data, knowledge, and information.

Possible solutions for the advanced processing of big EO data are offered by high performance platforms such as Grid, Cloud, Multicore, and Cluster. These solutions are quite complex; some involve not only the interoperability of scientific applications with different parallel and distributed infrastructures, but also the interoperability and coexistence of these heterogeneous software and hardware platforms. As platforms become more complex and heterogeneous, developing applications becomes harder, and efficiently mapping these applications onto a suitable, optimum platform that works on huge distributed data repositories is challenging as well, even with specialized software services. From the user's point of view, an optimum environment gives acceptable execution times, offers a high level of usability by hiding the complexity of the computing infrastructure, and provides open access to, and control over, application entities and functionality. Meanwhile, the developer requires support for good interoperability between platforms, service-oriented architectures, high scalability, transparent data access, simple and efficient security policies, and optimum management of processes and resources.

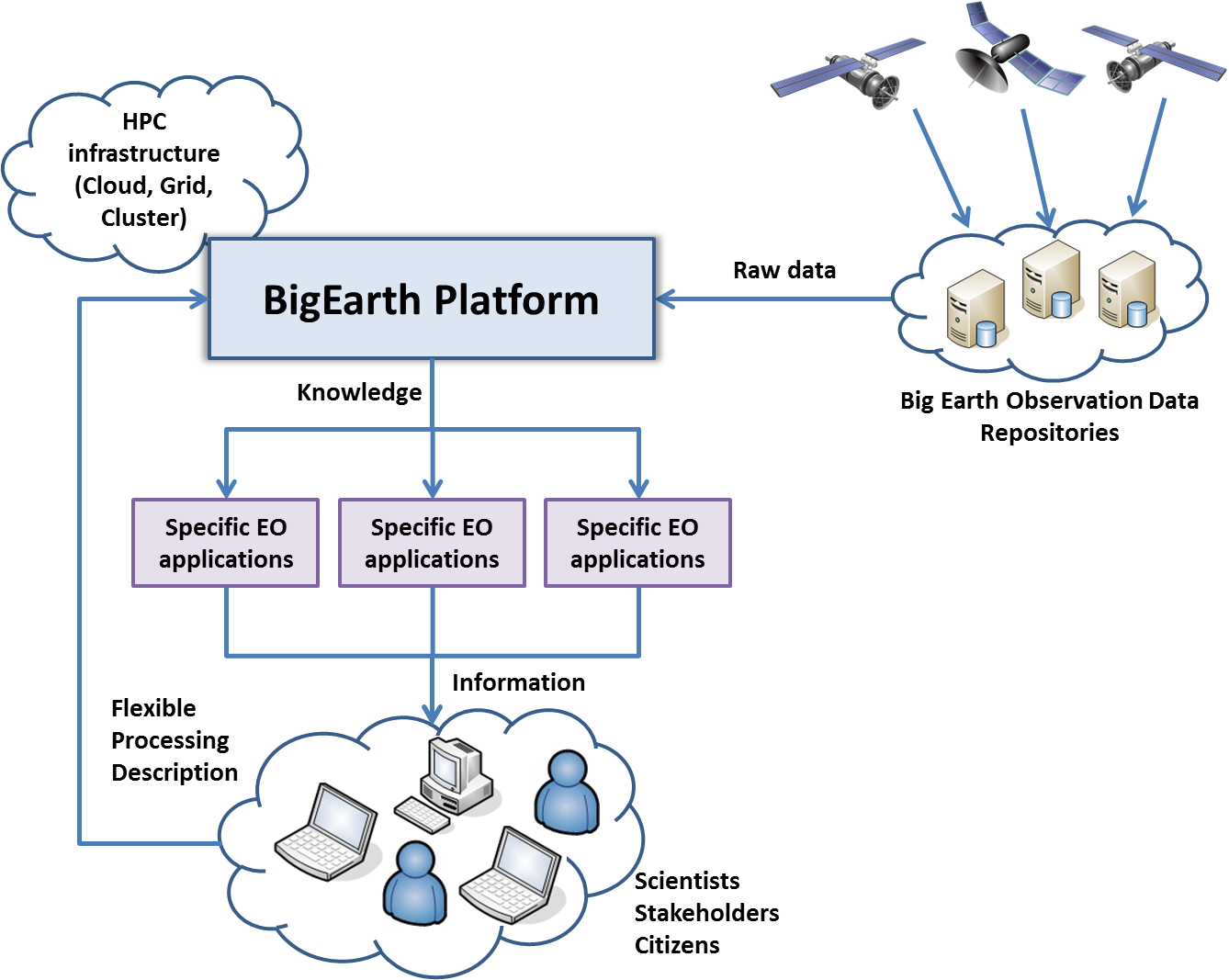

Figure 1. Conceptual BIGEARTH system architecture.

The BIGEARTH project is mainly concerned with the huge volumes of EO image data, exploring the possibility of revealing knowledge in a very flexible and adaptive manner. Users can describe and experiment with different complex algorithms themselves in order to extract value from the data (Figure 1).

The BIGEARTH project has the following main objectives:

- Flexible description of processing for knowledge extraction from big Earth Observation (EO) data

- HPC-oriented solutions for adaptive and portable processing of EO analytics

- EO data processing interoperability based on OGC standard services

- EO-oriented development methodologies based on flexible and adaptive complex processing

- Use case development and experimental validation for representative EO use cases